Text-Independent Speaker Recognition Based On Neural Networks

Published: 2022/07/04 / Last updated: 2022/07/04

Speaker recognition systems employ three styles of spoken input: text-dependent, text-prompted and text-independent. Most speaker verification applications use text-dependent input, which involves selection and enrollment of one or more voice passwords. Text-prompted input is used whenever there is concern about impostors.

The various technologies used to process and store voiceprints include hidden Markov models, pattern matching algorithms, neural networks, matrix representation and decision trees. Some systems also use “anti-speaker” techniques, such as cohort models and world models.

Ambient noise levels can impede collection of both the initial and subsequent voice samples. Performance degradation can result from changes in behavioral attributes of the voice and from enrollment using one telephone and verification on another telephone. Voice changes due to aging also need to be addressed by recognition systems. Give this algorithm a try to see what it’s really capable of!


A text-independent system attempts to recognize a voice print as belonging to a particular voice. This system makes no prior assumptions about the user and does not require that the voice be enrolled beforehand. It compares the unknown voice print against a reference database (a set of pre-recorded voice prints) and then makes a match or no-match decision.

In a text-independent system, the test of an unknown voice print against a reference database is done without first attempting to identify the speaker. Speech recognition is performed on an unknown phrase; if the output is not a match, then the database is re-queried using a smaller window of the original phrase. This process repeats until a match is found or the window of the phrase is so small as to be statistically insignificant. The acoustic features used in these comparisons include MFCCs, HOG, etc.
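The re-query loop described above can be sketched roughly as follows. This is a toy illustration, not the system's actual matcher: the function name `find_match`, the `min_window` cut-off, and the use of exact sub-sequence equality as the "match" test are all assumptions made for the example (a real system would compare feature vectors with a distance or likelihood score).

```python
def find_match(phrase, references, min_window=3):
    """Re-query the reference set with progressively shorter windows.

    phrase      -- sequence of feature values for the unknown utterance
    references  -- dict mapping a (hypothetical) label to a stored sequence
    min_window  -- assumed cut-off below which a match is not trusted
    """
    window = len(phrase)
    while window >= min_window:
        probe = list(phrase[:window])
        for label, stored in references.items():
            # Toy criterion: the probe occurs contiguously in the stored sequence.
            for start in range(len(stored) - window + 1):
                if list(stored[start:start + window]) == probe:
                    return label, window
        window -= 1  # no match: shrink the window and query again
    return None, 0  # window became too small to be meaningful


references = {"alice": [1, 2, 3, 4, 5], "bob": [9, 8, 7, 6]}
print(find_match([2, 3, 4, 0, 0], references))
print(find_match([7, 7, 7], references))
```

The loop stops either on the first match or once the window drops below `min_window`, which stands in for the "statistically insignificant" condition in the text.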

Neural networks are powerful mathematical tools used to represent and learn patterns in data. Generally, they consist of a number of simple processing elements, or nodes, which are interconnected with weights that control the flow of information. The weights are often chosen to optimize the performance of the network, such as the likelihood of the network generating the correct output. The input to the network is usually some type of modifiable vector, e.g., an MFCC or histogram, where the value of each vector entry represents the probability that a certain feature will have a particular value. By “training” the network, it is meant that the correct output is determined for certain inputs. For a given input, the network outputs a set of values that are representative of the probability that a particular output is correct. The overall error of the network is the sum of the error of each node in the network. This loss function is usually represented by a cost function:

$$F_{\text{est}} = \sum_{i=1}^{n} \gamma_i$$
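As a small numeric illustration of a cost formed by summing per-node errors, the sketch below assumes each node's error is the squared difference between its output and the desired output. The source does not say how the per-node error is defined, so `squared_errors` is an assumption chosen only to make the sum concrete.

```python
def squared_errors(outputs, targets):
    """Per-node error, assumed here to be the squared difference."""
    return [(o - t) ** 2 for o, t in zip(outputs, targets)]


def total_error(node_errors):
    """Sum the per-node errors into one network-level cost."""
    return sum(node_errors)


outputs = [0.5, 0.25, 0.25]   # network outputs for one input
targets = [1.0, 0.0, 0.0]     # desired outputs for that input
gammas = squared_errors(outputs, targets)
f_est = total_error(gammas)
print(gammas, f_est)
```

Training then amounts to adjusting the weights so that this summed cost decreases over the training inputs.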


1) Single-Channel Input Features:

– Generate the mel-frequency cepstrum (MFC) and/or line spectrum (LS) features

– The MFC feature is extracted using a minimum statistics (MS) technique while the LS feature is generated by a linear predictive coding (LPC) technique

2) Multi-Channel Input Features:

– Generate the mel-frequency cepstrum (MFC) and/or line spectrum (LS) features for each channel

3) Voice Activity Detection:

– The Continuous Time Isolation Neural Network (CTISN) detects the active audio segments.

– This is done by passing the input signal through a series of parallel layers. Each layer feeds the previous layer’s output to the next layer via a series of weighted connections. Each layer’s output is computed using a Linear Sigmoid Unit.

4) Pre-Processing:

– The first step of Pre-Processing involves amplitude normalization of the digitized signal

– Filtering of the pre-processed signal to reduce noise

– A very simple implementation for de-emphasis is employed

– Spectrum extension is performed to detect the active frequency bands and a FFT calculation is performed on the spectrum.

5) Feature Extraction:

– Features are extracted from the active frequency bands. The Mel Frequency Cepstral Coefficients (MFCCs) and Linear Predictive Cepstral Coefficients (LPCCs) are calculated to extract the features for each of the active frequency bands.

– We extract the MFCC features using the GMM mean spectrum for each frequency band.

– We select the best LM based on cross validation

– The resulting mean MFCC vector is used for classification

– We use a Gaussian mixture model for the Mel Spectral Cepstral Coefficients (M Mel coefficients) to generate MFCC features

6) Classifier Architecture

– We use a Hidden Markov Model to classify each input sequence. Each transition is a posteriori associated with a word label

7) System Formulation

– The performance of the system is modeled using Hidden Markov Models

– The EM algorithm is used to learn the transition and emission parameters

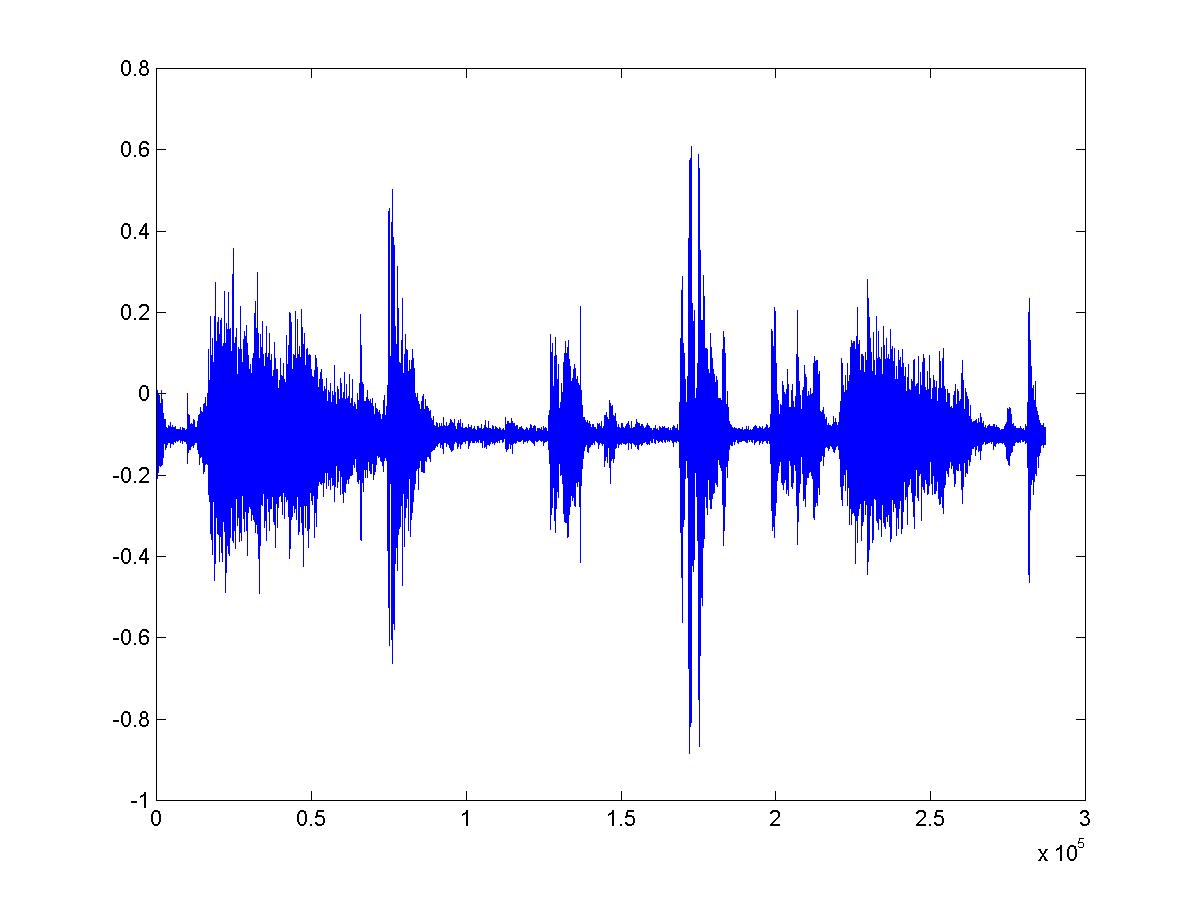

– The overall system architecture is shown in the figure below:

– The web
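The pre-processing steps listed under item 4 (amplitude normalization, a simple filter, and a frequency transform) can be sketched in plain Python as below. Several choices are assumptions rather than details from the text: the filter shown is a first-order pre-emphasis filter with `alpha=0.97`, which is a common front end for cepstral features (the list itself says "de-emphasis"), and the spectrum is computed with a direct DFT for readability where a real system would use an FFT.

```python
import cmath
import math


def normalize_amplitude(signal):
    """Scale the signal so its largest absolute sample is 1.0."""
    peak = max((abs(s) for s in signal), default=0.0)
    return [s / peak for s in signal] if peak > 0 else list(signal)


def pre_emphasis(signal, alpha=0.97):
    """First-order filter y[n] = x[n] - alpha * x[n-1] (alpha is assumed)."""
    if not signal:
        return []
    return [signal[0]] + [signal[n] - alpha * signal[n - 1]
                          for n in range(1, len(signal))]


def magnitude_spectrum(frame):
    """Magnitudes of DFT bins 0..N/2, computed directly in O(N^2)."""
    n = len(frame)
    return [abs(sum(frame[t] * cmath.exp(-2j * math.pi * k * t / n)
                    for t in range(n)))
            for k in range(n // 2 + 1)]


# One cycle of a sine wave over 8 samples, at an arbitrary amplitude.
raw = [2.0 * math.sin(2.0 * math.pi * n / 8) for n in range(8)]
normalized = normalize_amplitude(raw)
spectrum = magnitude_spectrum(normalized)
emphasized = pre_emphasis(normalized)
print([round(m, 3) for m in spectrum])
```

For the single-cycle sine used here, almost all of the energy falls in bin 1 of the spectrum, which is a quick sanity check on the transform.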



The Text-Independent Speaker Recognition (TSR) System is based on a neural network architecture which is trained using the Multi-dimensional Voiceprint of a speaker. The neural network structure consists of an input layer, an output layer and a hidden layer. The hidden layer consists of a set of hidden nodes. Each node in the hidden layer consists of an input function, output function, and an activation function. The hidden nodes calculate the output of the input function, and forward their calculated output values through a weighted sum of each hidden node to the output node.

The input nodes are connected to a set of identical input functions, which represent the noisy sample of a speaker’s voice. These input functions have identical connection weights to the output node, and hence, are connected to the hidden nodes in the same order. This network will therefore be called a first layer input network.

For the output layer, there is a single output neuron that models a desired response function for the inputs from the first layer input network. Each hidden node in the hidden layer has an output neuron attached, which models the neuron’s activation function and weights. The activation function models the desired output response function, which is specific to the application.

Each output neuron multiplies the input from the corresponding node in the first layer input network, with the weight and bias (i.e., the output neuron that models the activation function). This weighted sum of activation will be called the hidden node output. The hidden node output is then weighted and summed with the output of all the hidden nodes, using the corresponding output neuron weight and bias. This sum is the output from the output neuron of the network, which models the desired response function. This network is called the second layer output network. The output neuron weight and bias are shared by all output neurons. This network architecture is shown in FIG. 1.
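A minimal sketch of the forward computation described above, with one hidden layer feeding a single output neuron, might look like this. The sigmoid activation, the function name `forward`, and the example weights are assumptions for illustration; the text does not specify the activation function or any parameter values.

```python
import math


def sigmoid(x):
    """Assumed activation function; the source does not name one here."""
    return 1.0 / (1.0 + math.exp(-x))


def forward(features, hidden_weights, hidden_biases, output_weights, output_bias):
    """Return (hidden node outputs, network output) for one feature vector."""
    # Each hidden node output is the activation of a weighted sum plus bias.
    hidden = [
        sigmoid(sum(w * x for w, x in zip(weights, features)) + bias)
        for weights, bias in zip(hidden_weights, hidden_biases)
    ]
    # The single output neuron weights and sums all hidden node outputs.
    score = sigmoid(sum(w * h for w, h in zip(output_weights, hidden)) + output_bias)
    return hidden, score


features = [0.2, -0.4, 0.7]                          # hypothetical input features
hidden_weights = [[0.5, -0.3, 0.8], [0.1, 0.9, -0.2]]
hidden_biases = [0.0, 0.1]
output_weights = [1.2, -0.7]
output_bias = 0.05

hidden, score = forward(features, hidden_weights, hidden_biases,
                        output_weights, output_bias)
print(hidden, score)
```

With a sigmoid on the output neuron, the result lies strictly between 0 and 1, so it can be read as a score for the desired response.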

To facilitate a description, FIG. 1 will be considered as defining a neural network for speaker recognition. The network is a hybrid neural network architecture. The input layer is formed by inputting features from the original noisy sample. The output layer is formed by the output for the top layer’s single output neuron.

For the first layer input network, the input node function and weight are shared for all input nodes. For example, given a weight of “W” and a bias of “B”, the input node for the second hidden node would have a weight of “W” and a bias of “B”. Each input node’s weight


A text-independent speaker recognition system uses a fingerprinting approach and was originally developed by the Defense Advanced Research Projects Agency (DARPA) in the 1980s. Like the text-dependent approach, the text-independent approach relies on the concept that every person has a set of acoustic characteristics that is unique to him or her, like a fingerprint or DNA sample. The text-independent approach takes advantage of the fact that these characteristics are not only unique to the individual but also remain stable, at least over a relatively short period of time. Other systems have been developed that claim comparable or better performance. There are also several commercially available text-independent systems, such as those offered by Novellus, J. MAC, and N. Tel. A text-independent system is capable of determining whether a voice belongs to the same person as an enrolled voice. That is, given enrollment data of a person (known as a “target”), the system can determine whether an unknown voice is an impostor.

In a text-independent system, the fingerprints (voice samples) that are created are called “patterns.” A pattern can be thought of as a sequence of numbers, which is interpreted by the system. The system compares an unknown pattern to a list of enrolled or “target” patterns. If the unknown pattern matches the target pattern, the voice sample is determined to be an authentic voice; if not, the voice sample is determined to be an impostor.

The system performs the comparison by using a pattern recognition algorithm. In a straightforward algorithm, the system simply looks at how many numbers match between the unknown pattern and the enrolled patterns. This algorithm is “trivial” because any two patterns are only ever compared to each other.
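The straightforward comparison described above, counting how many numbers agree between the unknown pattern and each enrolled pattern, can be sketched as follows. The function names and the acceptance `threshold` are assumptions for the example; the text does not say how many matching values are required.

```python
def match_count(unknown, enrolled):
    """Count positions where the two patterns hold the same number."""
    return sum(1 for u, e in zip(unknown, enrolled) if u == e)


def verify(unknown, targets, threshold):
    """Label the sample by its best match count against the target patterns."""
    best = max((match_count(unknown, t) for t in targets), default=0)
    decision = "authentic" if best >= threshold else "impostor"
    return decision, best


targets = [[1, 1, 0, 1, 0], [1, 0, 1, 1, 1]]   # enrolled patterns
print(verify([1, 1, 0, 1, 1], targets, threshold=4))
print(verify([0, 0, 1, 0, 0], targets, threshold=4))
```

The first sample agrees with an enrolled pattern in four of five positions and is accepted; the second agrees in at most two and is rejected.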

The current art has used two basic algorithms for pattern recognition: Vector Quantization and Hidden Markov Models.

In the Vector Quantization (VQ) algorithm, the system uses a predetermined codebook of pre-established patterns. The pre-established patterns have a defined meaning (the meaning of the pattern is known as the “code” of the pattern). For example, in the case of a binary VQ algorithm, a codebook of patterns might be: 11010, 10111, 11011, 11101, 11110, and 11111. This codebook defines a pre-established set of patterns. The system will use these pre-established patterns to

